AndPlus acquired by expert technology adviser and managed service provider, Ensono. Read the full announcement


A basic iOS app that processes photos and makes predictions about their contents.

Machine learning (ML) is a broad area of computer science. Essentially, it can be broken down into two categories: model training and model implementation. Companies like Apple, Google, and IBM are working to make the technology more accessible so that developers can add ML concepts to their applications. Apple's Core ML framework, along with related frameworks such as Vision, helps simplify the model implementation side of ML. Core ML and Vision work closely together to process images and make predictions about their contents.

The AndPlus Innovation Lab is where our passion projects take place. Here, we explore promising technologies, cultivate new skills, put novel theories to the test and more — all on our time (not yours).

Core ML is a fairly new framework, introduced with iOS 11 in 2017. The idea is to allow integration of trained machine learning models into an iOS app. Core ML works with regression- and classification-based models and requires the Core ML model format (files with a .mlmodel extension). There were some initial misconceptions about how Core ML fits into the grand scheme of machine learning, and learning that it only works with pre-trained models, with no current support for additional training of the model, took some wind out of our sails about its capabilities.

That said, the important thing to remember is that Core ML is still a first-version product. Like the initial version of nearly every Apple product, it offers a reduced feature set of what might be possible, so that Apple can react to developers' needs and expand features and functionality over time. The API does provide a way to download and compile a model on a user's device, so a model can be tuned further offline and delivered to users as an update without pushing a new app version to the store.
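As a rough illustration of the download-and-compile path, the sketch below compiles a model file that the app has already downloaded and then loads it. It assumes the download itself is handled elsewhere; the function name `loadDownloadedModel` and the `downloadedModelURL` parameter are hypothetical and not part of our sample app.

```swift
import CoreML
import Foundation

/// Compiles a downloaded .mlmodel file and loads the result.
/// `downloadedModelURL` is a hypothetical local file URL where the app saved the download.
/// Compilation is synchronous and can be slow, so call this off the main thread.
func loadDownloadedModel(at downloadedModelURL: URL) throws -> MLModel {
    // compileModel(at:) writes a compiled .mlmodelc bundle to a temporary location.
    let compiledURL = try MLModel.compileModel(at: downloadedModelURL)

    // Move the compiled bundle somewhere permanent so it can be reused on later launches.
    let fileManager = FileManager.default
    let supportDirectory = try fileManager.url(for: .applicationSupportDirectory,
                                               in: .userDomainMask,
                                               appropriateFor: nil,
                                               create: true)
    let permanentURL = supportDirectory.appendingPathComponent(compiledURL.lastPathComponent)

    if fileManager.fileExists(atPath: permanentURL.path) {
        // An older compiled model is already there: replace it with the new one.
        _ = try fileManager.replaceItemAt(permanentURL, withItemAt: compiledURL)
    } else {
        try fileManager.copyItem(at: compiledURL, to: permanentURL)
    }

    // Load the compiled model for use with Core ML (or Vision).
    return try MLModel(contentsOf: permanentURL)
}
```

Note that this only swaps in a model that was trained elsewhere; nothing here trains or adjusts the model on the device.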

It is worth noting that using Core ML also requires the latest of the latest for iOS development: Xcode 9.2 and iOS 11. As for languages, Swift 4 seems to be preferred, as most examples and tutorials are written in it. You might even be able to use Objective-C, but that would likely require a lot more bridging code and probably isn't worth the effort involved. On top of that, Core ML only supports its own model format (.mlmodel). Thankfully, Apple provides a few pre-trained models in the proper format, as well as conversion tools (Core ML Tools) that can be extended with your own converter; converters already exist for MXNet and TensorFlow.

For our experiment, we used the Core ML and Vision frameworks to integrate a pre-trained image classification model into a sample app that makes predictions about the contents of a photo.
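The integration can be sketched roughly as follows. This is a minimal example rather than the exact code from our sample app: it assumes an already loaded classification `MLModel` (for instance, one of Apple's pre-trained models), and the function name `classify` is hypothetical.

```swift
import CoreML
import Vision
import CoreGraphics

/// Runs an image classification model on a photo and returns the labeled predictions.
/// `mlModel` is assumed to be a classification model that accepts an image as input.
/// The Vision request runs synchronously, so call this off the main thread.
func classify(_ cgImage: CGImage, with mlModel: MLModel) throws -> [VNClassificationObservation] {
    // Wrap the Core ML model so Vision can drive it.
    let visionModel = try VNCoreMLModel(for: mlModel)

    // Vision scales and crops the photo to the input size the model expects.
    let request = VNCoreMLRequest(model: visionModel)
    request.imageCropAndScaleOption = .centerCrop

    // perform(_:) blocks until the request finishes, after which results are populated.
    let handler = VNImageRequestHandler(cgImage: cgImage, options: [:])
    try handler.perform([request])

    // Each observation carries a label (`identifier`) and a `confidence` between 0 and 1.
    return request.results as? [VNClassificationObservation] ?? []
}
```

Vision takes care of converting the photo into the input the model expects, which is most of what makes the integration simple.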

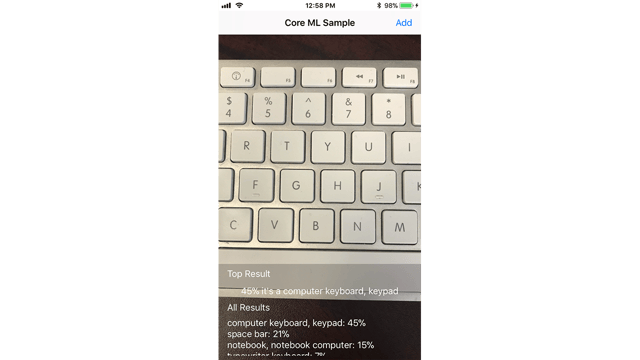

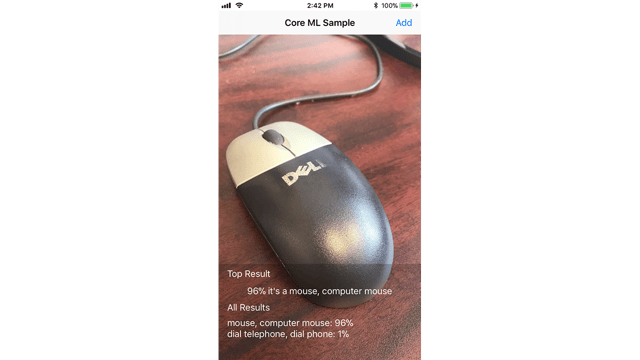

Above are a couple of screenshots of the app in action. The sample application shows the 'Top Result' and its confidence percentage, in addition to the complete result set with the confidence level of each entry. Different models will yield different results, but our main point of experimentation was the simplicity of integrating this into an app.
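Turning raw predictions into that kind of display is mostly a matter of sorting and formatting. The sketch below shows one way to do it; the `Prediction` type and the `percentString` and `summarize` helpers are hypothetical stand-ins for illustration, with `confidence` assumed to be a value between 0 and 1 as Vision reports it.

```swift
import Foundation

/// A hypothetical, simplified stand-in for a classification result.
struct Prediction {
    let label: String
    let confidence: Float   // 0.0 ... 1.0
}

/// Formats a 0...1 confidence value as a percentage with one decimal place.
func percentString(_ confidence: Float) -> String {
    return String(format: "%.1f%%", Double(confidence) * 100)
}

/// Sorts predictions by descending confidence and returns the top line plus the full list.
func summarize(_ predictions: [Prediction]) -> (top: String?, all: [String]) {
    let sorted = predictions.sorted { $0.confidence > $1.confidence }
    let lines = sorted.map { "\($0.label): \(percentString($0.confidence))" }
    return (lines.first, lines)
}

// Usage with made-up values:
let summary = summarize([
    Prediction(label: "cat", confidence: 0.25),
    Prediction(label: "dog", confidence: 0.75),
])
print(summary.top ?? "No result")   // prints "dog: 75.0%"
```

The explicit sort keeps the 'Top Result' correct regardless of the order in which the predictions arrive.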




257 Turnpike Road, Southborough, MA

508.425.7533
