Several technologies that are coming to the fore in commercial applications will change the way we develop software, and the uses to which it can be put. While some of these technologies are still at the unstable innovation stage, where it’s unclear whether they have a future or what it will involve, others are making the leap from niche uses and early adoption to the mainstream.

For software developers, then, the challenge is to identify these technologies and figure out how to select them for development projects, based on their actual functionality and applicability. We need to build the expertise to work in an environment characterized by technologies which are now emerging, but which will soon be dominant. And we need to learn how to communicate the reasoning behind our choices to our clients.

What is an emerging technology?

Emerging technologies aren’t always new tech. They might be well-known and established, just in another field. The term can mean radical new technologies, or technologies being used in a radical new way. In general, it’s taken to mean something that will be widely available and effective within five to ten years, and which is likely to significantly change a business or industry.

Rotolo, Hicks, and Martin, in their 2015 paper ‘What is an Emerging Technology?’, arrived at a definition which ‘identifies five attributes that feature in the emergence of novel technologies. These are: (i) radical novelty, (ii) relatively fast growth, (iii) coherence, (iv) prominent impact, and (v) uncertainty and ambiguity.’

Thus, truly experimental technologies that are years from commercial application (so-called ‘jetpack’ technologies, or innovations that lack the requisite supporting technologies and infrastructure) don’t count as emerging. Obviously, neither do well-established or obsolete technologies. In software development, we couldn’t describe CADES (1970s) or Python (mainstream) as ‘emergent’, but we could make a case for Wolfram or TensorFlow.

Emerging technology and the adoption lifecycle

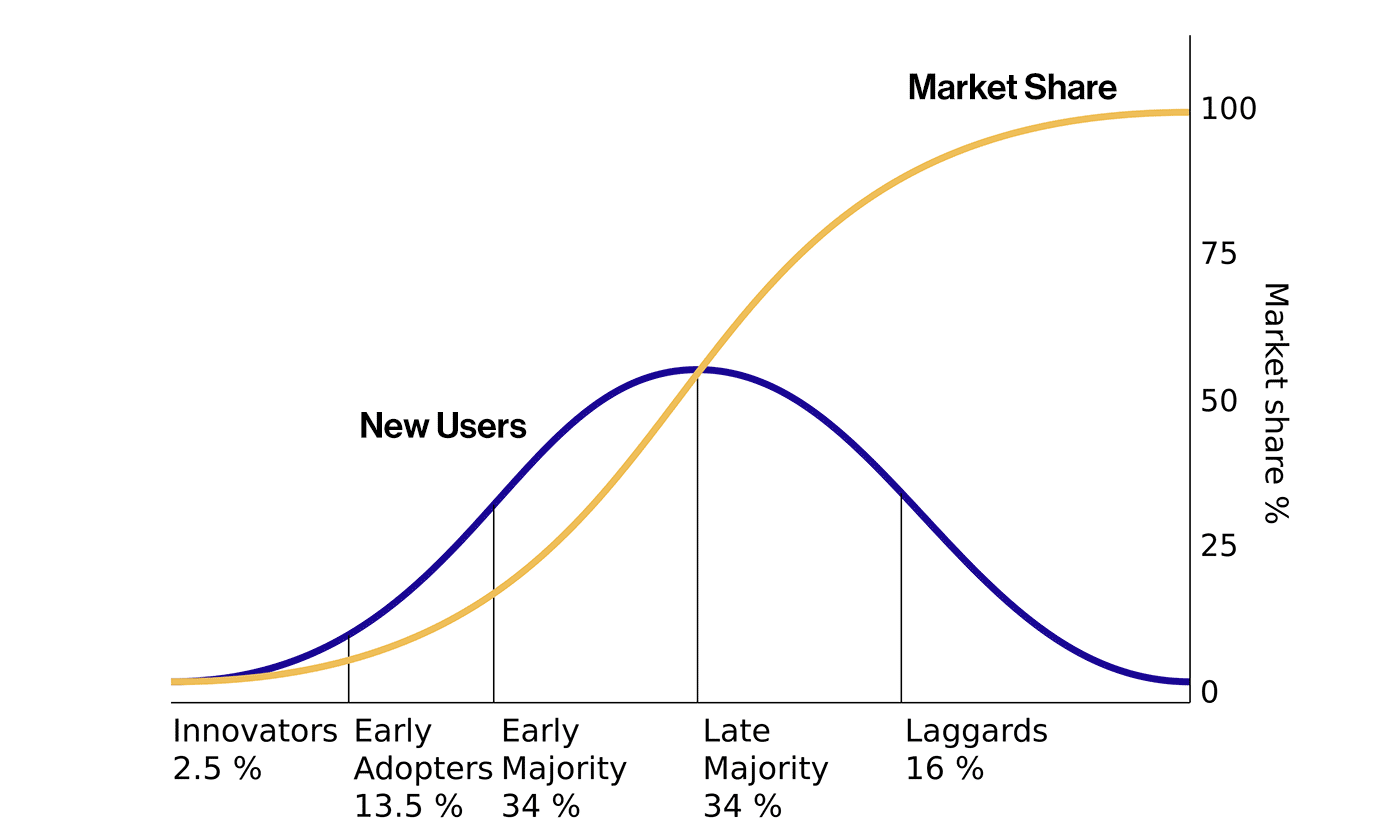

Technology adoption follows a fairly predictable pattern — first enthusiasts and innovators, then early adopters, then the early majority whose weight of numbers indicates likely long-term success.

In this process, there’s a ‘chasm’ between the early adopters and the early majority. This chasm is where experimental technologies go, either to die or to wait until infrastructure and supporting technologies catch up.

So what we don’t want to do is step in before the chasm and bet on emerging technologies that haven’t yet passed this test. No matter how good they are on paper, or what benefits they could allow us to deliver to our clients, these technologies are more experimental than emerging.

The chasm and the trap: selecting emerging technologies on the right basis

We’ve talked about that chasm between early adopters and enthusiasts on one side, and wider, mainstream implementation on the other. It’s the gap that separates technology that becomes unobtrusive because it’s ubiquitous from technology that never makes it across, like the Sinclair C5 or the Segway.

This chasm can be a trap for unwary adopters of new technologies. Sometimes we can be deceived by what’s known as Shiny Object Syndrome: the irresistible lure of something that looks new and exciting. In other cases, people are convinced on too little evidence that a technology or tool has transformative capability, when in reality it’s in its infancy. The outcome can be dangerous for businesses: money down the drain, time wasted, reputation squandered.

Yet, there are technologies that really do have the potential to transform business and life. You’re using two of them (internet, computing) right now. So the crucial consideration for selecting emerging technologies for software development is actually threefold:

- What does the technology do? Is it appropriate to the needs of the project? This is the same consideration we’d use to match any tool with any project, stage or task.

- How mature is the technology? We want a mix of tried and tested, reliable tools, and options that are new enough to allow us to achieve competitive advantage — without shackling ourselves to a pipe dream.

- How does the technology align with a current strategy or future vision for the company? This speaks to project selection as well as to technology selection. Everything has to align, because where it doesn’t you’re wasting money and effort. This is particularly crucial with emerging technologies, whose major selling point is their transformative capacity.

There’s a group of emergent technologies that are becoming increasingly relevant to software development, opening new possibilities without excessive risk. We’ll look at those, and then talk about how to select emerging technologies for software development projects.

Emerging technologies to watch out for

These technologies are entering real commercial use, and as they change how we utilize software and transfigure the world around us, they’ll also become the ‘new normal’ for which we write code.

Blockchain

Blockchains don’t have to support only cryptocurrencies. Beyond financialized activities like DeFi and NFTs, a blockchain offers a ledger that is both distributed and tamper-evident: records are replicated across many nodes, and each block is cryptographically linked to the one before it, so history can’t be quietly rewritten. That’s why it’s attracting attention from supply chain managers, lawyers, aid agencies, and the IoT and manufacturing sectors.
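To make the ‘secure’ half of that claim concrete, here is a minimal Python sketch of the hash-linking that makes a blockchain ledger tamper-evident. It is a toy, single-node illustration under stated assumptions: the `Block` and `Ledger` names are hypothetical, and it deliberately omits the consensus protocol, peer-to-peer replication and digital signatures that real blockchains such as Ethereum rely on for the ‘distributed’ half.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Block:
    index: int
    data: dict
    prev_hash: str
    block_hash: str = field(init=False)

    def __post_init__(self) -> None:
        self.block_hash = self.compute_hash()

    def compute_hash(self) -> str:
        # Hash this block's contents together with the previous block's hash,
        # so altering any earlier block changes every hash that follows it.
        payload = json.dumps(
            {"index": self.index, "data": self.data, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class Ledger:
    """Toy single-node hash chain: no consensus, networking, or signatures."""

    def __init__(self) -> None:
        self.blocks = [Block(0, {"genesis": True}, "0" * 64)]

    def append(self, data: dict) -> Block:
        block = Block(len(self.blocks), data, self.blocks[-1].block_hash)
        self.blocks.append(block)
        return block

    def is_valid(self) -> bool:
        # Each block must still match its own stored hash...
        if any(b.block_hash != b.compute_hash() for b in self.blocks):
            return False
        # ...and must point at the hash of the block before it.
        return all(
            curr.prev_hash == prev.block_hash
            for prev, curr in zip(self.blocks, self.blocks[1:])
        )


if __name__ == "__main__":
    ledger = Ledger()
    ledger.append({"shipment": "A-100", "status": "dispatched"})
    ledger.append({"shipment": "A-100", "status": "delivered"})
    print(ledger.is_valid())  # True

    ledger.blocks[1].data["status"] = "lost"  # quietly rewrite history
    print(ledger.is_valid())  # False
```

Because each block’s hash covers the previous block’s hash, editing any historical record breaks validation from that point on. An attacker would have to recompute every later block, which is what consensus mechanisms make impractical on a real network.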

As more general-purpose blockchain projects emerge, there’s more scope for non-crypto blockchain applications. After a raft of poorly received projects over the last few years, Ethereum’s multipurpose chain and a number of private blockchains now serve as proofs of concept. There’s also the about-to-launch Polkadot ecosystem, which will allow users to build their own applications using mainstream languages rather than Solidity.

AI and ML

Expectations for artificial intelligence and machine learning were scaled back as ‘general AI’ failed to materialize and many projects touting AI floundered. But now, with predictable use cases and clear areas of competence, ‘if you have a narrowly defined task that involves recognizing a small set of patterns in data,’ AI applications are a serious business.

We can expect applications with a small, predictable range of inputs, whatever their volume, to move over to AI first. This means burgeoning demand for AI chatbots in customer service, which one widely cited forecast expects to handle 95% of customer interactions by 2025, as well as for industrial and logistics applications. Overall, the AI market is forecast to reach $22.6 billion by 2025.

AR

Augmented reality is a jetpack whose time has come. After years of clumsy attempts, heavy headsets and cumbersome wires, the supporting technology is in place to make limited but functional AR a reality. Just ask any of Pokémon Go’s 166 million users.

Currently, AR is mostly smartphone-based, and one of its most directly useful applications is AR-specific browsers such as Argon4, which embed information about users’ surroundings directly into their view of them.

Digital Twinning

A digital twin is a digitized representation or copy (‘twin’) of a real process or structure. Unlike a static virtual model, a digital twin is built from live data supplied by sensors on the real structure, and can also be used to control that structure in real time.
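The two properties that distinguish a digital twin, state mirrored from live sensor data and the ability to act back on the physical system, can be sketched in a few lines of Python. This is an illustrative sketch only: `DigitalTwin`, `SensorReading`, the `temperature` sensor, the 90-degree limit and the `reduce_load` command are all hypothetical, and `send_command` stands in for whatever control channel (an IoT message broker, say) a real deployment would use.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SensorReading:
    sensor_id: str
    value: float
    timestamp: float  # seconds since some epoch


@dataclass
class DigitalTwin:
    """Mirrors a physical asset's latest state and can act back on it.

    `send_command` is a hypothetical stand-in for the asset's real control
    channel; the twin itself never talks to hardware directly.
    """

    asset_id: str
    send_command: Callable[[str, str], None]
    max_temperature: float = 90.0  # hypothetical safety limit
    state: dict = field(default_factory=dict)

    def ingest(self, reading: SensorReading) -> None:
        current = self.state.get(reading.sensor_id)
        if current is not None and reading.timestamp < current.timestamp:
            return  # late, out-of-order reading: keep the newer value
        self.state[reading.sensor_id] = reading
        self._check_limits()

    def _check_limits(self) -> None:
        temp = self.state.get("temperature")
        if temp is not None and temp.value > self.max_temperature:
            # Close the loop: act on the real asset, not just observe it.
            self.send_command(self.asset_id, "reduce_load")


if __name__ == "__main__":
    sent = []
    twin = DigitalTwin("pump-7", send_command=lambda asset, cmd: sent.append((asset, cmd)))
    twin.ingest(SensorReading("temperature", 72.5, timestamp=1.0))
    twin.ingest(SensorReading("temperature", 95.0, timestamp=2.0))
    print(sent)  # [('pump-7', 'reduce_load')]
```

Keeping only the newest reading per sensor guards against out-of-order delivery, a common issue when readings arrive over a network.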

The process is already used to monitor crucial, complex systems such as aircraft engines in real-time, and its applications for IoT are obvious.

As with many software-oriented innovations, its first use was in government programs, in this case at NASA. Because spacecraft are so hard to test in a live environment, NASA used digital twinning instead.

In 2017, Gartner named digital twinning its fifth most important strategic technology trend. As technological barriers to digital twinning fall, the company expects that by the end of 2021 half of all large industrial companies will be using digital twins.

Containerization

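Containerization has become a core technology of modern cloud computing, offering increased portability and isolation. Docker popularized containers and remains the most familiar tool for building and running them, while Kubernetes has become the standard for orchestrating containers at scale. The two are complementary rather than competing, and many teams build images with Docker and run them on Kubernetes.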

Containerization promises to let developers ‘code once, run anywhere’, and its light, low-footprint implementations make it particularly well suited to edge computing. Currently, about 8% of enterprise-generated data is processed outside a traditional data center or cloud; Gartner predicts that by 2025, this figure will be 75% or more. Containerization is a vital first step toward that shift.

Computing at the edge of the cloud

Cloud computing seems to have taken over the world, but in fact it has only taken its first steps. Forrester predicts the public cloud infrastructure market will grow 35% in 2021 alone, as businesses move to a more reliable, secure and distributed data model.

As this happens, computing will paradoxically become both much more centralized and much more decentralized. Edge computing will rise, moving as much computation as possible to the edge of the network, close to where data is generated, to reduce latency. Meanwhile, cloud-based data management and processing will create ‘cloud cores’, where huge troves of non-time-sensitive data are processed centrally.
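One way to picture that split is a routing rule on an edge device: events that need a response faster than a round trip to the cloud allows are handled locally, and everything else is batched for the central ‘cloud core’. The sketch below is a simplified Python illustration, not a reference architecture; `Event`, `EdgeNode`, `route` and the 80 ms round-trip figure are assumptions chosen for the example.

```python
from dataclasses import dataclass

# Assumed round-trip time to a central cloud region, in milliseconds.
CLOUD_ROUND_TRIP_MS = 80.0


@dataclass
class Event:
    source: str
    payload: dict
    deadline_ms: float  # how quickly a response is needed


def route(event: Event) -> str:
    """Handle at the edge anything that can't wait for a cloud round trip."""
    return "edge" if event.deadline_ms < CLOUD_ROUND_TRIP_MS else "cloud"


class EdgeNode:
    def __init__(self) -> None:
        self.handled_locally: list[Event] = []
        self.upload_batch: list[Event] = []

    def receive(self, event: Event) -> None:
        if route(event) == "edge":
            self.handled_locally.append(event)  # time-sensitive: processed on site
        else:
            self.upload_batch.append(event)  # queued for the cloud core


if __name__ == "__main__":
    node = EdgeNode()
    node.receive(Event("conveyor-3", {"jam": True}, deadline_ms=10))
    node.receive(Event("conveyor-3", {"daily_throughput": 1840}, deadline_ms=3_600_000))
    print(len(node.handled_locally), len(node.upload_batch))  # 1 1
```

In practice the threshold would come from measured network latency rather than a constant, and the batch would be uploaded on a schedule or whenever connectivity allows.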

Selecting emerging technologies for your project

What all these technologies have in common is that each is unlikely to be utilized alone. Instead, we can expect to see large, multifaceted development projects that involve IoT manufacturing or processing, with some AI for local decision-making and yet more for centralized data analysis and planning.

Some transactional or highly secure data will be on blockchain; we just don’t know how much. Some work will be done through AR. A small group of individuals, physically distributed, using AR to supervise the digital twin of an IoT factory is a future that’s closer than we think. The value of these emergent technologies will be realized in combination, so we need to learn to develop for them with that in mind.

Selecting the appropriate technology set for a project will take the same shape as all client interactions: some clients will already know what they want and be right, while others in the same position will have to be educated out of their enthusiasms.

In many cases, clients will know what they want to achieve but not how to get it, so it will fall to us to match technologies with aspects of the multi-platform flows required to take best advantage of distributed, sometimes-decentralized software applications.

Takeaways

- Emerging technologies are set to change the way major industries like logistics and manufacturing operations function, so we need to be ready to meet their burgeoning software demands.

- It’s the task of developers and software engineers to match technology stacks with projects; stacks, rather than individual solutions, will be standard, as many of these technologies depend on each other for value.

- The process of developing for these technologies will be a question of learning new libraries and new applications (robotics or conversational interfaces, say) but probably not new languages. The trend is to make development easier by enabling it in existing languages.

- Much of the work will involve educating clients about the disparate elements of the new tech stack.