Before you release software, you need to know whether it’s good enough. Software quality testing tells you whether it’s ready to ship. It also gives you data and insights you can feed back upstream to fine-tune your processes, shorten development cycles, and improve engineer and team performance.

Measurement by itself doesn’t improve anything. However, knowing if your code is flawed can stop you from damaging your reputation by shipping a buggy product. Having a solid quality testing structure in place lets you ‘learn to the test,’ developing with quality testing in mind from day one. You should be able to use your quality testing metrics to:

- Predict defects

- Discover and fix bugs

- Increase development efficiency

- Improve productivity

In this post, we’ll start with an intro to software quality testing, give an overview of the most common software quality models, then take a more in-depth look at one of the most robust and adaptable approaches.

Software quality: what are we testing?

Software quality testing measures whether software meets requirements, which can be either functional or non-functional.

Functional requirements

Functional requirements specify what software should do. That might be calculations, technical details, data manipulation, or anything else that an application has been designed to accomplish.

Non-functional requirements

Non-functional requirements specify how the software should work. These are also known as ‘quality attributes,’ and they’re what we’ll be talking about in this post. They include things like recoverability, security, supportability and usability.

Think of software as a black box. Functional requirements measure what comes out of it. Non-functional requirements measure what happens inside it. This post is about the inside of the box.

Software quality testing models

There are several approaches to understanding software quality. Among the most comprehensive is McCall’s model.

McCall’s quality factor model

McCall’s model identifies 11 quality factors grouped under three headings:

Product operation factors

- Correctness

- Reliability

- Efficiency

- Integrity

- Usability

Product revision factors

- Maintainability

- Flexibility

- Testability

Product transition factors

- Portability

- Reusability

- Interoperability

This model is sometimes referred to as the ‘quality triangle.’

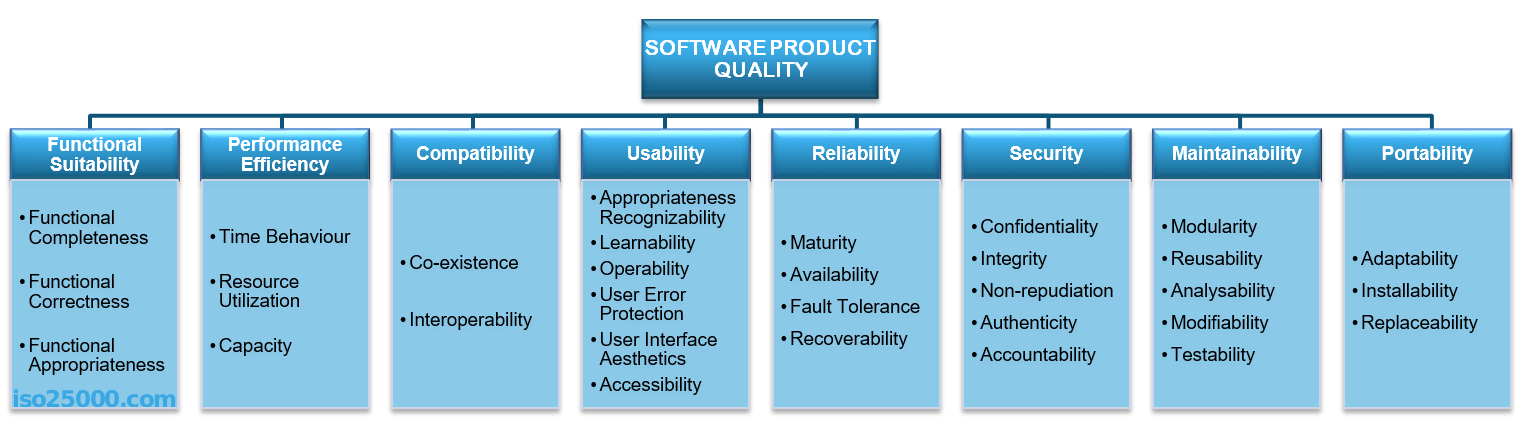

ISO/IEC 9126 and ISO/IEC 25010-25011

The ISO quality models are even more detailed. This overview will be brief.

ISO/IEC 9126 was an international set of quality standards. It has now been superseded by ISO/IEC 25010-25011.

ISO/IEC 25010-25011 uses eight domains of quality:

- Functional suitability

- Performance efficiency

- Compatibility

- Usability

- Reliability

- Security

- Maintainability

- Portability

Several sub-characteristics are considered under each heading. ISO/IEC 25010 is designed to be comprehensive. However, one of the problems with these models is that they can be too detailed and restrictive to apply in practice.

CISQ

CISQ, the Consortium for Information and Software Quality, proposes a set of standards that address software quality at the source code level, focusing on four factors:

1. Reliability

Reliability is the stability of a program when exposed to unexpected conditions. You’re looking for minimal downtime, good data integrity, and no errors that directly affect users.

Common forms of reliability testing include:

Feature testing

Feature testing checks that the features offered by the application work as expected.

- Each operation is executed once

- Interaction between operations is reduced as much as possible

- Each operation is checked to see that it operates as expected
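The three points above can be sketched as a small test suite. This is only an illustration: the `Cart` class is a hypothetical stand-in for an application feature, and the tests use Python’s standard `unittest` module. Each operation gets its own test, and each test builds its own state so the operations interact as little as possible.

```python
import unittest


class Cart:
    """Hypothetical application feature: a shopping cart."""

    def __init__(self):
        self.items = {}

    def add(self, sku, qty=1):
        if qty <= 0:
            raise ValueError("qty must be positive")
        self.items[sku] = self.items.get(sku, 0) + qty

    def remove(self, sku):
        self.items.pop(sku, None)

    def count(self):
        return sum(self.items.values())


class CartFeatureTest(unittest.TestCase):
    def test_add(self):
        cart = Cart()
        cart.add("A1", 2)
        self.assertEqual(cart.count(), 2)

    def test_remove(self):
        cart = Cart()
        # Set state directly rather than calling add(), so this test
        # exercises remove() in isolation.
        cart.items = {"A1": 2}
        cart.remove("A1")
        self.assertEqual(cart.count(), 0)

    def test_add_rejects_non_positive_qty(self):
        with self.assertRaises(ValueError):
            Cart().add("A1", 0)
```

Saved to a file, a suite like this can be run with `python -m unittest`.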

Load testing

Load testing checks the performance of an application under increasing load, until a load is found that causes the software to fail or its response time to degrade unacceptably. A web application might be tested by adding users, or a data management application by adding data streams. (Load testing is often used to measure performance efficiency too.)
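As a rough sketch of the idea, the loop below steps the load up until response time passes an acceptable limit. The `fake_latency_ms` function is an invented stand-in for a real measurement (in practice you would drive real traffic and time real responses), and the numbers are made up for illustration.

```python
from typing import Callable, Iterable, Optional


def find_breaking_load(
    measure_latency_ms: Callable[[int], int],
    loads: Iterable[int],
    max_latency_ms: int,
) -> Optional[int]:
    """Return the first load level whose latency exceeds the limit, or None."""
    for load in loads:
        if measure_latency_ms(load) > max_latency_ms:
            return load
    return None


def fake_latency_ms(users: int) -> int:
    # Hypothetical model, not real data: flat 200 ms up to 500 users,
    # then 1 ms of extra latency for every additional user.
    return 200 + max(0, users - 500)


# Step from 100 to 2,000 users in increments of 100.
breaking_load = find_breaking_load(
    fake_latency_ms, range(100, 2001, 100), max_latency_ms=450
)
print(breaking_load)  # 800 with the made-up model above
```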

Regression testing

Regression testing checks if new bugs have been introduced in recently-added code, including material written to address previous bugs. Regression testing should be performed after every code update.
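One common way to do this is to keep a test for every bug you fix, so the same bug can’t quietly return. The sketch below assumes a hypothetical `parse_price` function and two invented past bugs; the test names and the function are illustrative only.

```python
def parse_price(text: str) -> int:
    """Hypothetical function: parse a price like '1,299.50' into cents."""
    cleaned = text.replace(",", "").strip()
    dollars, _, cents = cleaned.partition(".")
    # Pad or truncate the fractional part to exactly two digits.
    return int(dollars) * 100 + int((cents + "00")[:2])


def test_regression_thousands_separator():
    # Pins an invented earlier bug where '1,299.50' failed to parse.
    assert parse_price("1,299.50") == 129950


def test_regression_single_decimal_digit():
    # Pins an invented earlier bug where '3.5' was read as 3.05.
    assert parse_price("3.5") == 350
```

Tests written this way are picked up by runners such as pytest and can be run after every code update.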

Steady-state and growth testing

Experts run the application in a simulated operational environment similar to what’s anticipated in production, then gradually change variables like number of users, throughput and so on to test how stable the application is.

Average failure rate

Average failure rate measures the average number of failures in a given period per user or deployed unit.

Mean time between failures

This metric gives you the average (mean) uptime, measured from one major failure to the next.
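Both of these metrics are simple arithmetic. The sketch below is one possible way to compute them, assuming you have a failure count and a list of failure timestamps; the function names are our own, and the time-between-failures calculation is simplified in that it ignores how long each repair took.

```python
from datetime import datetime, timedelta
from typing import Sequence


def average_failure_rate(failures: int, units: int, periods: float) -> float:
    """Average failures per deployed unit (or user) per period."""
    if units <= 0 or periods <= 0:
        raise ValueError("units and periods must be positive")
    return failures / (units * periods)


def mean_time_between_failures(failure_times: Sequence[datetime]) -> timedelta:
    """Mean gap from one failure to the next (needs at least two failures)."""
    ordered = sorted(failure_times)
    if len(ordered) < 2:
        raise ValueError("need at least two failures")
    return (ordered[-1] - ordered[0]) / (len(ordered) - 1)


# Illustrative data: 12 failures across 4 deployed units over 3 months.
rate = average_failure_rate(failures=12, units=4, periods=3)

# Illustrative data: three major failures in January.
mtbf = mean_time_between_failures(
    [datetime(2024, 1, 1), datetime(2024, 1, 11), datetime(2024, 1, 31)]
)
print(rate, mtbf)
```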

2. Performance efficiency

Performance efficiency is an application’s resource usage relative to its goals, with reference to how it affects scalability, customer satisfaction, and response time. Software architecture, source code design, the design of individual components, and interdependencies all affect efficiency.

Common ways to test software efficiency include load testing, as mentioned above, as well as:

Infrastructure testing

Testing processes, features, and the whole application across different infrastructures — in the case of a native desktop application, this would be different computers — then using those devices’ resource management tools to identify the application’s live resource usage.

Stress testing

Pushing the system along different variables (the number of users it can handle, the files it can retrieve in a given period, and so on) until it fails or its performance degrades.

Soak testing

Checking to see if the system can handle a given load for a specified, long period of time. It might be able to run with 10,000 users, but can it do so for a week, or just a few hours?

Application performance monitoring (APM)

APM provides detailed performance metrics from the user’s perspective in real time, and lets you view traces and logs, follow requests through the system, and more. This service is usually provided via third-party APM applications.

3. Security

Security is how well an application protects its information against breaches and losses. This includes data piped in from other applications, data at rest in databases and data in transit. Poor coding and insecure architecture often contribute to software vulnerabilities.

Commonly-used security testing techniques include:

Authentication and authorization testing

Testers create dummy accounts with all the permission types available in the application, then see if they can access all, and only, the spaces within the application appropriate to those permissions.
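The “all, and only” check can be automated by comparing what each role can actually reach against what it should reach. The sketch below is a minimal illustration: the roles, areas, and `can_access` function are hypothetical, and in a real test `can_access` would log in as a dummy account and request the area.

```python
# Hypothetical expected permissions: role -> areas it should reach.
EXPECTED = {
    "viewer": {"dashboard"},
    "editor": {"dashboard", "content"},
    "admin": {"dashboard", "content", "settings"},
}
ALL_AREAS = {"dashboard", "content", "settings"}


def can_access(role, area):
    """Stand-in for the application's real access check."""
    return area in EXPECTED.get(role, set())


def audit_access(check, expected, areas):
    """Return (role, area, actual) for every case where access is wrong.

    Flags both missing access (should reach it, can't) and excess
    access (shouldn't reach it, can).
    """
    mismatches = []
    for role, allowed in expected.items():
        for area in sorted(areas):
            actual = check(role, area)
            if actual != (area in allowed):
                mismatches.append((role, area, actual))
    return mismatches


# An empty list means every role reaches all, and only, its own areas.
print(audit_access(can_access, EXPECTED, ALL_AREAS))
```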

Data security

Data security testing checks that only those with appropriate permissions can access application data at rest or in transit. This includes testing to ensure that data is properly encrypted.

Simulated attacks

At the very least, testers should simulate brute-force attacks and verify that the risks from such attacks are mitigated by appropriate security measures. For example, it should be impossible to find a valid ID, and then make unlimited login attempts to brute-force the password. Testers should also check to see that security measures against attacks like SQL injections and cross-site scripting are in place and working.
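A brute-force simulation can be as simple as replaying a list of guesses and checking that the account locks before the right password gets through. The sketch below assumes a hypothetical lockout policy (`LoginGuard`, five attempts); a real system would compare password hashes and would usually add time-based unlocks or rate limiting as well.

```python
class LoginGuard:
    """Hypothetical lockout policy: block an account after too many failures."""

    def __init__(self, max_attempts=5):
        self.max_attempts = max_attempts
        self.failures = {}

    def attempt(self, user_id, password, real_password):
        if self.failures.get(user_id, 0) >= self.max_attempts:
            return "locked"
        if password == real_password:
            self.failures[user_id] = 0
            return "ok"
        self.failures[user_id] = self.failures.get(user_id, 0) + 1
        return "denied"


def simulate_brute_force(guard, user_id, real_password, guesses):
    """Try each guess in turn and return the list of outcomes."""
    return [guard.attempt(user_id, guess, real_password) for guess in guesses]


# Five wrong guesses, then the correct password on the sixth try.
outcomes = simulate_brute_force(
    LoginGuard(max_attempts=5),
    "user-1",
    "secret",
    ["a", "b", "c", "d", "e", "secret"],
)
print(outcomes)
```

With this policy, the sixth attempt is rejected even though the guess is correct, which is the behavior the test is looking for.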

Error handling

Applications should be tested to check how they behave when user actions cause errors such as HTTP 400 (Bad Request), 404 (Not Found), and 408 (Request Timeout).

Number of vulnerabilities

Scans can reveal known vulnerabilities identified from other software that uses the same libraries or architecture. The number of vulnerabilities in your application provides a baseline negative measure of security.

Security logs

For deployed software, part of the story can come from real security data acquired in the field: the number of real incidents, their severity, total time under attack, downtime, and losses.

Time to resolution

How long does it take for a vulnerability to be addressed satisfactorily after it has been identified?

Payments and uploads

These are two of the riskiest actions on a web application. Can they be done securely, safely, and easily?

4. Maintainability

Maintainability is how easy it is to modify software, adapt it for different purposes, or transfer it between one development team and another. If a new developer can sit down, look at the code, and immediately understand its purpose and function, the software is highly maintainable. Inconsistent coding and poor compliance with architecture best practices tend to produce code which is difficult and expensive to maintain.

Maintainability testing involves testing the code, not the running application. Common tests include:

Static code analysis

Automated code examinations can identify problems and ensure code adheres to industry standards. This is done on the static code without running the software, looking for:

- Development standards - Are standards adhered to for structured programming, database approach, nomenclature and human readability, and user interfaces?

- Implementation - Have input, processing and output been implemented separately?

- Parameters - Have application elements been parameterized to promote reusability?

Software complexity metrics

More complex code is generally less maintainable code, so metrics of complexity like cyclomatic complexity and N-node complexity flag code that should be simplified if possible.
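To make cyclomatic complexity concrete, here is a rough approximation for Python source using the standard `ast` module: start at 1 and add one for each decision point. This is a sketch, not a full implementation; dedicated tools count more constructs, and the `SAMPLE` function is invented for the example.

```python
import ast

# Node types treated as one decision point each (an approximation).
_DECISION_NODES = (
    ast.If,
    ast.For,
    ast.While,
    ast.ExceptHandler,
    ast.IfExp,
    ast.comprehension,
)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' adds two extra paths, one per extra operand.
            complexity += len(node.values) - 1
    return complexity


SAMPLE = '''
def classify(n):
    if n < 0 and n != -1:
        return "negative"
    elif n == 0:
        return "zero"
    for d in range(2, n):
        if n % d == 0:
            return "composite"
    return "other"
'''

# 1 (base) + if + 'and' + elif + for + inner if
print(cyclomatic_complexity(SAMPLE))  # 6
```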

Lines of code

As simple as it gets. More lines of code usually means more complex, less maintainable code that’s more prone to quality issues.
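Counting lines is easy to automate. The sketch below counts non-blank lines that aren’t whole-line comments, assuming `#` as the comment marker; that convention is our assumption, and teams differ on exactly what counts as a line of code.

```python
def count_sloc(source: str, comment_prefix: str = "#") -> int:
    """Count non-blank lines that are not whole-line comments."""
    count = 0
    for line in source.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith(comment_prefix):
            count += 1
    return count


EXAMPLE = """# setup

x = 1
    # indented comment
y = 2  # trailing comments still count as code
"""

print(count_sloc(EXAMPLE))  # 2
```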

Why CISQ+?

Simplicity and open-endedness make CISQ a popular choice. In practice, most companies use ‘CISQ+’, which is just CISQ, plus whatever else they need from the McCall or ISO lists, typically including:

Rate of delivery

This measures how often new versions of an application are shipped to customers. New versions typically come with improvements across the board, such as new features, security patches, bug fixes, and UI updates, so higher rates of delivery typically correlate with improved customer satisfaction.

Testability

More testable software is usually better software, because it’s easier to identify and address problems. Clearly-defined quality metrics, matched with software designed to be tested against those metrics, should equal higher quality software in production.

Usability

User testing is slightly outside the scope of this post in that it also addresses UX design and other factors. However, two of the biggest concerns to users are simplicity and task execution speed, both of which can be tested before software ships.

How AndPlus can help

We’ve been at the forefront of testing and quality assurance from the start. We insist on improving our processes so our outcomes get better, then work on those too. We test our code, our applications, and our development methodology, then make changes based on the data. That’s why we recommend a multi-year roadmap for software development, even though our development cycles are typically quite short. It’s why we start with a product map that involves in-depth consultancy with clients, so we know what we’re aiming for before we start; that’s our definition of quality. And it’s why we bring specific skill sets to projects like redesigns and first-stage launches.

Takeaways

- Quality testing doesn’t have to be excessively complex, and focusing on the aspects most important to users can yield dividends.

- It’s important to seek to improve development processes as well as software quality.

- Deciding exactly what you mean by ‘quality’ and putting numbers to it makes it easier and more impactful to measure, track, and improve.

- Testing is simple, inexpensive and easy — compared to serious software flaws, downtime and breaches.